Unit tests help developers write better code and give faster feedback than manual testing. But unit tests are also code that must be maintained. They can become a mess just like production code can. Here are some tips on how to improve your tests and avoid that situation.

Keep Your Tests Small

Unit tests are supposed to test a small unit of code. What you understand as a "unit of code" is up to you and your team. Is it a method, class, or module? The discussion is unnecessary as long as you agree with your team and as long as your test stays relatively small.

A test that comprises 400 lines of code is hard to read and is probably doing too much. It might also point to a class or method that's doing too much, i.e., you could be violating the single responsibility principle. Such tests are also difficult to maintain. If something changes in the code that you're testing, you might break the test and have a hard time fixing it. You'll have to go through all those lines of code, trying to create a mental picture of everything that's going on.

Split up larger tests into smaller parts. If it requires you to refactor the production code into smaller parts too, that's actually a win. Both pieces of code will then be easier to read and easier to maintain or change.

Avoid Test Inheritance

Often, several tests share the same piece of setup code. For example, you might need a customer object in multiple tests. A solution I've often seen is a base class from which other test classes inherit. Something like this:

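```csharp
using NUnit.Framework;

[TestFixture]
public class Given_A_Customer
{
    // Shared state that every inheriting test class can read.
    protected Customer Customer { get; set; }

    public Given_A_Customer()
    {
        // Customer construction is elided in the original example.
        this.Customer = ...
    }
}
```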

This assumes that you split up your unit tests into several classes instead of methods. Both approaches are fine, each with its pros and cons. But using inheritance as in the example above leads to classes like this:

- Given_A_Customer_With_Gold_Status
- Given_A_Customer_With_Silver_Status
- Given_A_Customer_With_Gold_Status_And_Older_Than_60
- Given_A_Customer_With_Silver_Status_And_Older_Than_60
- Given_A_Customer_Older_Than_60

Each test class inherits from the base class, some of them through an intermediary class. For example, "Given_A_Customer_With_Gold_Status_And_Older_Than_60" inherits from "Given_A_Customer_With_Gold_Status," and "Given_A_Customer_With_Silver_Status_And_Older_Than_60" inherits from "Given_A_Customer_With_Silver_Status." Are you starting to see the problem here? Even though the "Older_Than_60" classes share similar code, they can't also inherit from a base class that sets up a customer older than 60. They already inherit from separate classes (one "gold customer" and one "silver customer"), and most languages don't allow multiple inheritance.

Also, if you take this approach, your test logic is spread across multiple inheriting classes. If you need to see what's going on, you have to navigate through all these files and remember what you've read.

The solution? Composition over inheritance. It's a good idea in your production code, and it's just as good an idea in your tests. Don't let tests inherit from each other. If you need similar pieces of setup, put the shared logic in a separate class and use that class in your tests. In the examples above, we could write a TestCustomerBuilder class that takes parameters and sets up a customer with a given status and age. This leads to cleaner and more flexible tests.
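Here is a minimal sketch of what such a TestCustomerBuilder could look like, assuming NUnit (suggested by the [TestFixture] attribute above). The Customer class, the CustomerStatus enum, the default values, and the WithStatus and WithAge method names are all hypothetical, since the real Customer class isn't shown here.

```csharp
using NUnit.Framework;

// Hypothetical domain types, invented for this sketch.
public enum CustomerStatus { Regular, Silver, Gold }

public class Customer
{
    public Customer(CustomerStatus status, int age)
    {
        Status = status;
        Age = age;
    }

    public CustomerStatus Status { get; }
    public int Age { get; }
}

// Shared setup lives in this helper instead of in a base test class.
public class TestCustomerBuilder
{
    // Arbitrary defaults, so a test only states what it cares about.
    private CustomerStatus _status = CustomerStatus.Regular;
    private int _age = 30;

    public TestCustomerBuilder WithStatus(CustomerStatus status)
    {
        _status = status;
        return this;
    }

    public TestCustomerBuilder WithAge(int age)
    {
        _age = age;
        return this;
    }

    public Customer Build() => new Customer(_status, _age);
}

[TestFixture]
public class Given_A_Customer_With_Gold_Status_And_Older_Than_60
{
    [Test]
    public void Builder_Sets_Status_And_Age()
    {
        // Status and age are combined freely; no inheritance chain is needed.
        var customer = new TestCustomerBuilder()
            .WithStatus(CustomerStatus.Gold)
            .WithAge(65)
            .Build();

        Assert.That(customer.Status, Is.EqualTo(CustomerStatus.Gold));
        Assert.That(customer.Age, Is.GreaterThan(60));
    }
}
```

A silver customer older than 60 is the same three lines with a different argument, and the whole setup stays visible inside the test that uses it.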

Use Code Coverage To Your Advantage

Ah, code coverage. There have been many discussions about it. The industry seems to have accepted that 100% code coverage is probably a silly idea, but then how much code coverage is enough? It really depends on your project. If you're writing a public SDK that your customers use, you'll want a fairly high percentage of code coverage because bugs will harm your business. If you're writing a non-critical line-of-business application, your tests will serve more to drive your design than to catch bugs. We've touched on this subject at length before.

But it's a good practice to have at least insight into how much code your tests are covering. Many development teams don't really know, or they don't know which pieces of the application have low or high code coverage.

Visualizing your code coverage metrics is a useful way of seeing where you should focus. Critical parts of the application will require a higher percentage of code coverage than the non-critical parts.

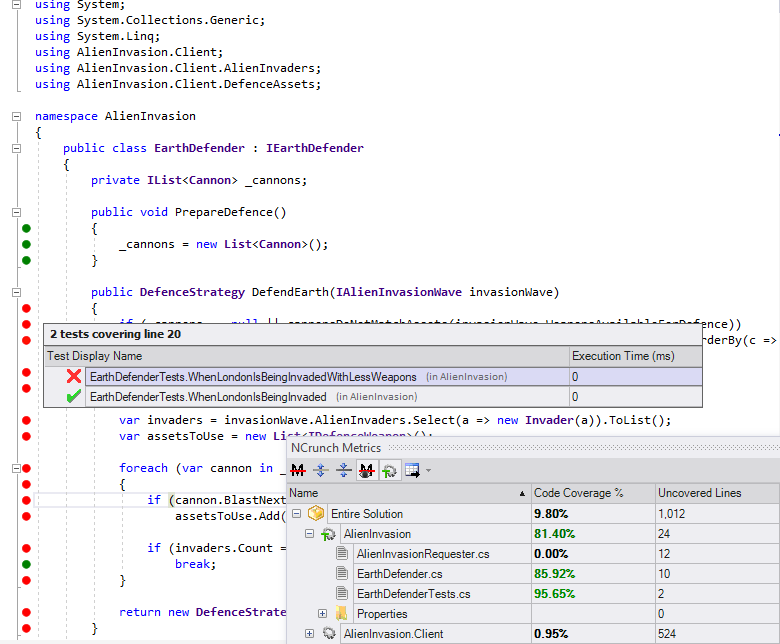

NCrunch is a tool that can provide such code coverage. In the screenshot below you can see what it looks like:

You can see that we can navigate from a single line of code to the tests that cover this line. And we can see that one of these tests is failing. There is also an overview of all test coverage in the solution.

Use code coverage to identify problematic areas and not to reach some arbitrary goal.

Performance

The subject of how fast your tests should run is something we've mentioned before too. Like code coverage, there's no exact answer to this question. But if you read the linked article, you'll see how we come to a frightening conclusion: tests should be extremely fast, "seconds-fast" rather than "minutes-fast."

This is often practically impossible, which is why various techniques exist to improve the situation. The post I mentioned above goes into more detail on how you can increase the speed of your tests. To summarize, you can:

- refactor slow-running code
- refactor the application into smaller applications or microservices
- run your tests in parallel
- run them continuously
- offload the test run

The last two points deserve some extra words.

Traditionally, developers switch between writing code (both tests and application code) and running the tests. While the tests run, they wait: first for the compilation, then for the tests to finish. Having a tool like NCrunch run your tests continuously allows you to just write code and see the test results as updates come in. This improves the process of writing code more than you might think. You no longer have to stare at a spinner or progress bar. Just continue writing code, and you'll see the results as you change it. This is especially useful if you're in the habit of running your tests regularly between code changes, which you should be.

Another interesting technique to improve your test performance is to offload the test run to other computers. When you have hundreds of tests, this allows you to distribute them among a grid of machines. This is what NCrunch calls distributed processing.
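Of the techniques listed above, running tests in parallel is the easiest to show in code. The sketch below assumes NUnit 3 or later as the test framework, which is a guess based on the [TestFixture] attribute used earlier. Tools like NCrunch have their own settings for this. The worker count of 4 is an arbitrary illustrative value.

```csharp
using NUnit.Framework;

// Assembly-level attributes, placed once per test project
// (for example in a file such as AssemblyInfo.cs).

// Allow different fixtures to run in parallel with each other;
// tests inside a single fixture still run one after another.
[assembly: Parallelizable(ParallelScope.Fixtures)]

// Cap the number of worker threads (4 is an arbitrary example value).
[assembly: LevelOfParallelism(4)]
```

Parallel runs only pay off when tests don't share mutable state, so this technique works best alongside well-isolated tests.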

Keep Them Deterministic

This is probably a no-brainer for most readers. But it makes sense to revisit this principle. The idea is to have your tests always produce the same output if there are no code changes. Integration and end-to-end tests could be forgiven for violating this rule, as they often have more moving parts. But unit tests should be at a small enough level that they always behave identically across different test runs. If they don't, you'll have a hard time finding and fixing bugs.
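A common source of non-determinism is code that reads the system clock. As a minimal sketch, assuming NUnit, the hypothetical AgeCalculator class below receives its notion of "today" through the constructor, so a test can pin the date. The class, its method names, and the dates are all invented for illustration.

```csharp
using System;
using NUnit.Framework;

// Hypothetical class that computes an age from a birth date.
public class AgeCalculator
{
    private readonly Func<DateTime> _today;

    // The clock is injected; production code could pass () => DateTime.Today.
    public AgeCalculator(Func<DateTime> today)
    {
        _today = today;
    }

    public int AgeOf(DateTime birthDate)
    {
        var today = _today();
        var age = today.Year - birthDate.Year;

        // Subtract a year if this year's birthday has not happened yet.
        if (birthDate.Date > today.AddYears(-age)) age--;

        return age;
    }
}

[TestFixture]
public class AgeCalculatorTests
{
    [Test]
    public void Customer_Turns_60_On_Their_Birthday()
    {
        // A fixed "today" gives the same result on every run.
        var calculator = new AgeCalculator(() => new DateTime(2024, 6, 15));

        Assert.That(calculator.AgeOf(new DateTime(1964, 6, 15)), Is.EqualTo(60));
        Assert.That(calculator.AgeOf(new DateTime(1964, 6, 16)), Is.EqualTo(59));
    }
}
```

Had AgeOf called DateTime.Today directly, the second assertion would start failing one day after the chosen date without any code change.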

Isolate Your Tests From Each Other

Here's another no-brainer for most of you. Tests should be isolated from each other. If they need similar setup or cleanup, perform it separately for each test. You want to be able to run the entire test suite, just a selection of tests, or a single test without having to worry about needing to run other tests first.

Also, if tests have side effects on other tests, they can become non-deterministic. This, in turn, makes it difficult and frustrating to fix broken tests, especially if they sometimes pass and sometimes don't.
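As a minimal sketch of per-test setup, assuming NUnit, the fixture below creates a fresh object before every test with the [SetUp] attribute. The ShoppingCart class is hypothetical and exists only to give the tests some state to share by accident.

```csharp
using System.Collections.Generic;
using NUnit.Framework;

// Hypothetical class under test.
public class ShoppingCart
{
    private readonly List<string> _items = new List<string>();

    public int Count => _items.Count;

    public void Add(string item) => _items.Add(item);
}

[TestFixture]
public class ShoppingCartTests
{
    private ShoppingCart _cart;

    // NUnit runs this method before each test, so every test starts
    // with its own empty cart.
    [SetUp]
    public void CreateEmptyCart()
    {
        _cart = new ShoppingCart();
    }

    [Test]
    public void New_Cart_Is_Empty()
    {
        Assert.That(_cart.Count, Is.EqualTo(0));
    }

    [Test]
    public void Adding_An_Item_Increases_The_Count()
    {
        _cart.Add("book");

        Assert.That(_cart.Count, Is.EqualTo(1));
    }
}
```

Both tests pass in any order and on their own. If the cart were created once and reused, New_Cart_Is_Empty would pass or fail depending on whether the other test ran first.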

Make Your Tests Readable

We should make an effort to keep our production code readable. It makes life easier later, for us and for our colleagues. The same goes for unit tests. Give them clear class, file, and method names. Consider using a library like Fluent Assertions or NFluent. Anyone reading the test later (including you!) will thank you for it.
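As a small illustration, here is a test with a descriptive name and a Fluent Assertions check, assuming NUnit and the FluentAssertions package are referenced. The Discounts class and its 10% rule are hypothetical and exist only to give the assertion something to check.

```csharp
using FluentAssertions;
using NUnit.Framework;

// Hypothetical business rule, invented for this sketch.
public static class Discounts
{
    public static decimal For(bool isGoldCustomer) => isGoldCustomer ? 0.10m : 0m;
}

[TestFixture]
public class DiscountTests
{
    // The method name states the scenario and the expected outcome.
    [Test]
    public void Gold_Customer_Gets_Ten_Percent_Discount()
    {
        var discount = Discounts.For(isGoldCustomer: true);

        // The assertion reads like a sentence; the second argument is a
        // reason that is included in the message if the check fails.
        discount.Should().Be(0.10m, "gold customers get 10% off");
    }
}
```

The named argument and the reason string cost a few extra characters, but they save the next reader from having to look up what the test is meant to prove.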

What Do You Do?

Keep in mind that unit tests should be treated like any other piece of code. After all, we're constantly running them and constantly working in and with them. If we don't treat them well, it'll only lead to frustration and faulty software.

These were my main tips to improve your unit tests. Did I miss anything? Or do you have any special ways of improving your tests? Let us know in the comments.

This post was written by Peter Morlion. Peter is a passionate programmer who helps people and companies improve the quality of their code, especially in legacy codebases. He firmly believes that industry best practices are invaluable when working towards this goal, and his specialties include TDD, DI, and SOLID principles.